Conversion Is King. Here’s How To Optimize For It

Conversion optimization is big business.

Who doesn’t want to increase traffic to their site, grow their email list, and drive sales?

Run a search for conversion optimization and you’ll find no shortage of advice, from testing to tactics.

Change your button colors! Alter your font! Move images around!

Sound familiar?

Many think of this as conversion optimization. Businesses waste time and money running these sorts of tests, only to net small (if any) improvements.

This is tweaking, not testing.

People don’t take action because they don’t understand your offer, how it compares with your competitors, or how it’s priced – not because your color scheme is limiting.

The best conversion optimizers have a process-oriented mindset.

They use a systematic approach to find the roadblocks preventing your potential customer from taking your desired action.

Their success comes from hours spent collecting data, examining user behavior, hypothesizing, implementing, and constantly iterating.

In this post, I’ll show you the process the best conversion optimizers use to achieve growth and discuss a shortcoming that most testers are guilty of.

Build A Test Hypothesis Using A Framework

Much of the testing we see today is planned at short notice without much forethought.

And for good reason.

Investing 15 minutes to set up a test that brings about a 10% increase in conversions is rewarding and makes us feel good. Thanks for the rush, dopamine!

We’re drawn to tests like this one by Performable.

But do tests like these really solve the fundamental issues faced by your users?

Perhaps.

For example, let’s say visitors to your landing page are convinced by your offer but struggle to locate the next step to take.

In this case, changing the button color of your CTA to draw more attention to it makes sense.

However, if users are lost on your offer, the ‘success’ you associate with any result from a test like this does not reflect your full potential for improving conversions.

So why are these ‘random’ tests so popular and common amongst conversion optimizers?

That’s because it’s easy to copy what has worked for others.

Consider this scenario. You read a case study in which a competitor was able to increase sales by 200% by adding multiple CTAs to their landing page.

Believing this to be the secret to success, you set out to replicate their trick.

You believe that since this tactic worked for someone else, it can have the same impact on your own business.

Of course, your version fails to deliver the same results.

Why?

There’s no strategy or business case behind your test.

You’re not entirely to blame for this. Humans have a cognitive bias (the anchoring effect) which leads us to rely on the first piece of information we receive.

It causes us to rush to judgment and make irrational decisions.

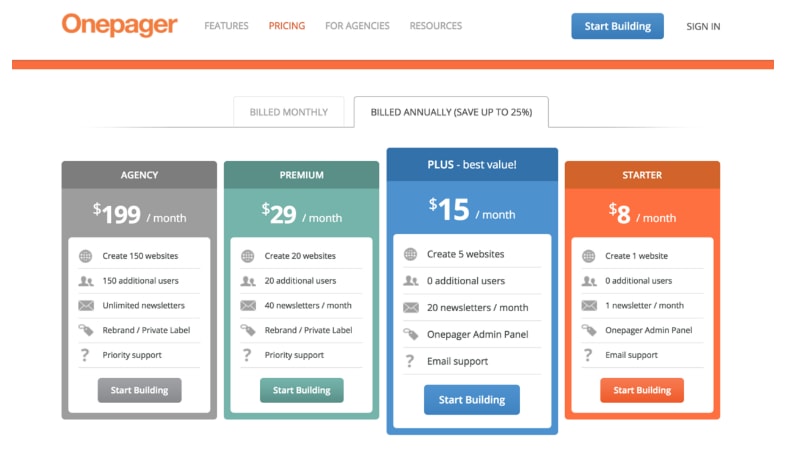

Check out this pricing page from Onepager:

Notice how the first price is the highest?

It makes the offers after it seem like a bargain because our mind has latched on to $199 and compares the other choices against it.

Similarly, we latch on to the first few ideas for testing we come across.

Unfortunately, no two businesses are identical.

Your audience and the challenges it faces are unique.

Blindly following what others are testing won’t work. Your tests should always investigate a roadblock that’s relevant to your business.

When running a test, you should be able to answer these questions:

- Why are you testing X rather than Y?

- What will you measure?

- At what stage are the visitors who participate in your test?

- How long will you run the test?

If you can’t answer all of these questions, follow this process to uncover the pertinent info.

Step 1: Figure out what to test

Half the battle in running a successful experiment is to first figure out what to test.

Like I said, unless you’re testing something that impacts your audience’s ability or motivation to convert, you’ve failed before you’ve started.

So, how do you find choke points along your conversion path?

Through quantitative and qualitative data.

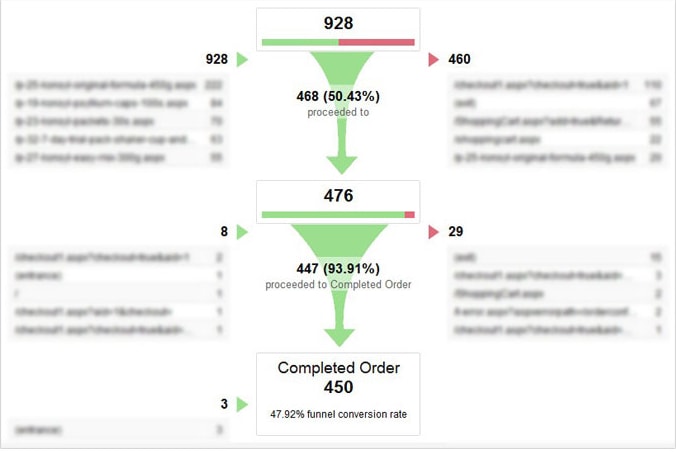

Google Analytics, heat maps, and surveys provide you with detailed insight regarding how people interact with your product. Look for locations where users drop off.

This is a nudge in the right direction toward what to test.

In the example above, it’s clear that most people drop off between step 1 and 2.
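
If your analytics tool only gives you raw visitor counts per step, a few lines of code can point to the biggest leak. The following is a minimal sketch: the step names and visitor counts are made up for illustration, and `step_drop_offs` and `worst_step` are hypothetical helpers, not part of any analytics product.

```python
def step_drop_offs(funnel):
    """Return (from_step, to_step, drop_off_rate) for each consecutive pair of steps."""
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(funnel, funnel[1:]):
        # Share of visitors at step A who never reach step B.
        rate = 1 - count_b / count_a if count_a else 0.0
        results.append((name_a, name_b, rate))
    return results


def worst_step(funnel):
    """Return the transition that loses the largest share of visitors."""
    return max(step_drop_offs(funnel), key=lambda item: item[2])


# Hypothetical visitor counts for a four-step funnel.
funnel = [
    ("product page", 10_000),
    ("cart", 3_000),
    ("checkout", 2_400),
    ("purchase", 1_800),
]

for start, end, rate in step_drop_offs(funnel):
    print(f"{start} -> {end}: {rate:.0%} drop off")

print("Biggest leak:", " -> ".join(worst_step(funnel)[:2]))
```

The transition with the highest drop-off rate is a candidate area for testing, not yet an explanation of why people leave.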

In the next few steps, we’ll home in on how to find and prioritize what to test in this area.

Step 2: Develop a test hypothesis based on data

Now that you’ve found an area that requires attention, we’re going to develop a test hypothesis.

Keep in mind that the reason behind a drop-off isn’t always straightforward.

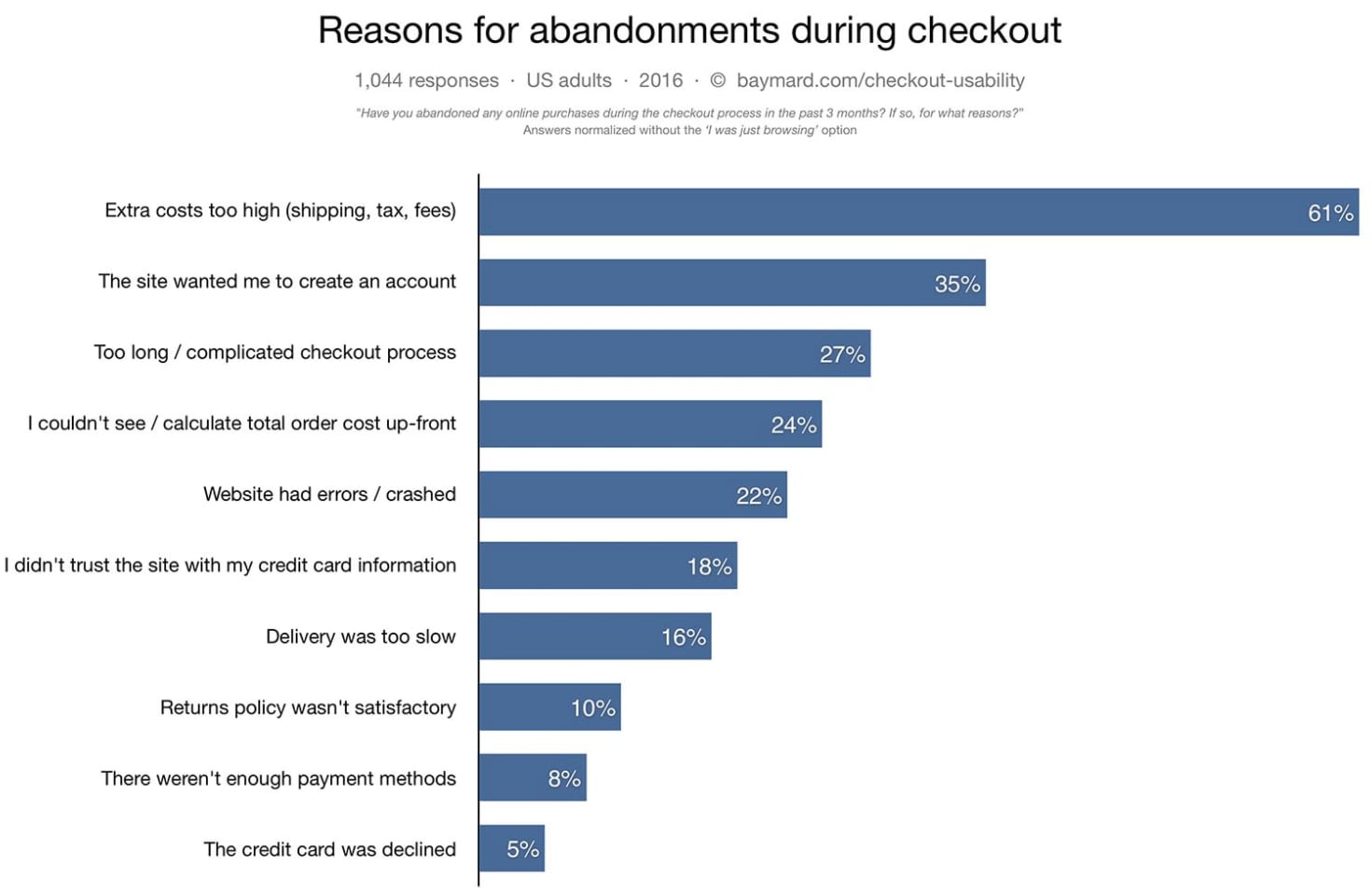

For example, a high cart abandonment rate doesn’t automatically mean there’s a problem in your checkout flow.

Instead, it could be a result of ‘hidden’ shipping charges or any of these other factors.

To find the reason for cart abandonment on your site, spend time collecting additional data. Surveys and usability tests are just a couple of ways to discover why users abandon their cart.

If you still can’t substantiate a reason to target, use your judgment and make an educated guess.

In the next section, I’ll talk about how you can minimize risk around guesswork.

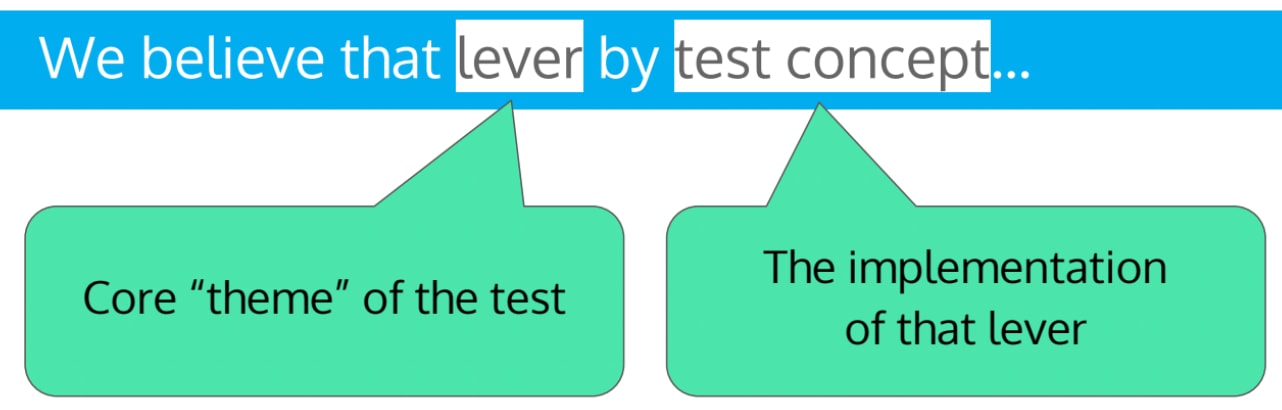

For now, pick a hypothesis and go with it. Make sure you build your statement around a ‘lever’ and a ‘test concept’.

This lever is the foundation of your test.

Use it to state (and justify) what you’re going to test. The test concept, in turn, supports your lever by defining what you plan to change.

When creating a hypothesis for our cart abandonment example, we may frame it like this:

“We believe that displaying shipping charges [lever] by adding them to our product pages [test concept] will increase conversions”.

Why is it important to do this?

If your test fails, you can easily figure out what the flaw is. Either your lever was wrong or how you chose to fix it (test concept) was faulty.

Step 3: Pick your test audience

Your hypothesis from the previous step (while great) is still rudimentary.

We’re going to build it out further.

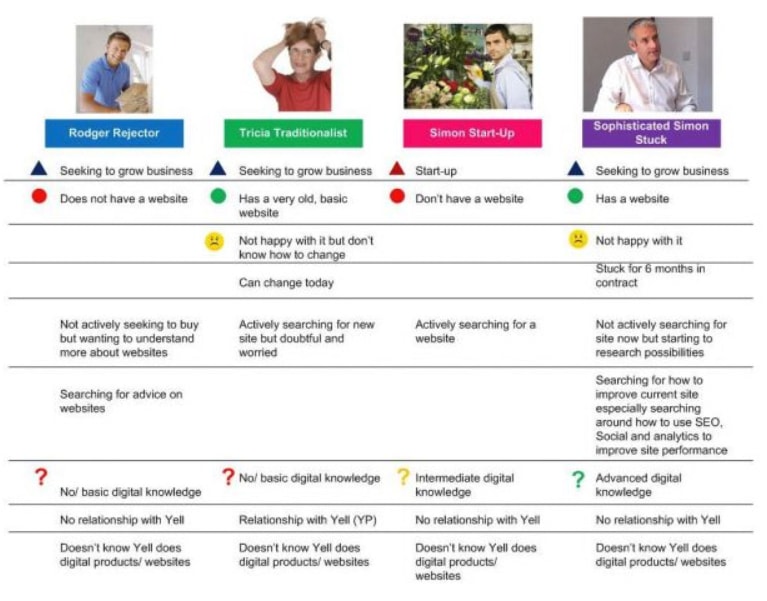

The next step is to identify which audience segment to expose to your test. Visitors to your site are not a homogeneous entity.

Remember your customer personas?

Each persona is a potential test candidate that will react differently.

For example, one visitor may be a potential first-time buyer running Chrome on his laptop whereas another is a repeat customer using Safari on her iPhone.

Who do you expose to your test?

To answer this question, take a look at the pages where most users drop off.

Determine the most common type of user on this page and select this group as your test audience. Show the change to a portion of this group (the test variant) and place the rest in a ‘control group’ that sees the original page.

Doing this is important because it’s the only way to be confident that your changes are doing more good than harm.

Building on our example, we might expand our hypothesis to:

“We believe that displaying shipping charges [lever] to our mobile shoppers [test audience] by adding them to our product pages [test concept] will increase conversions”.
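
If you plan to run many tests, it can help to record each hypothesis in the same shape so the lever, audience, and test concept are never left implicit. Here is one possible sketch; the `Hypothesis` class and its field names are my own illustration, not a standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    lever: str         # what you believe is holding visitors back
    audience: str      # the segment exposed to the test
    test_concept: str  # the concrete change you will make

    def statement(self) -> str:
        """Render the hypothesis in the sentence form used in this post."""
        return (
            f"We believe that {self.lever} [lever] "
            f"to {self.audience} [test audience] "
            f"by {self.test_concept} [test concept] "
            "will increase conversions."
        )


hypothesis = Hypothesis(
    lever="displaying shipping charges",
    audience="our mobile shoppers",
    test_concept="adding them to our product pages",
)
print(hypothesis.statement())
```

Keeping the three parts as separate fields makes it easier to see later whether a failed test calls for a new lever or just a new test concept.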

Step 4: Define what success looks like

Last but not least, specify how you’ll measure success and in what time frame.

Pick a key performance indicator (KPI) relevant to the test and stick with it.

Perhaps in our example of displaying shipping charges on the product page, we would measure a change in the percentage of page visitors who place an item in the cart. Or perhaps the percentage of page visitors who complete a purchase.

If you were adding a more defined CTA to a page, it would be more relevant to measure click-through than sales, as too many other factors can affect the customer’s decision to complete the purchase (price, product availability, etc.).

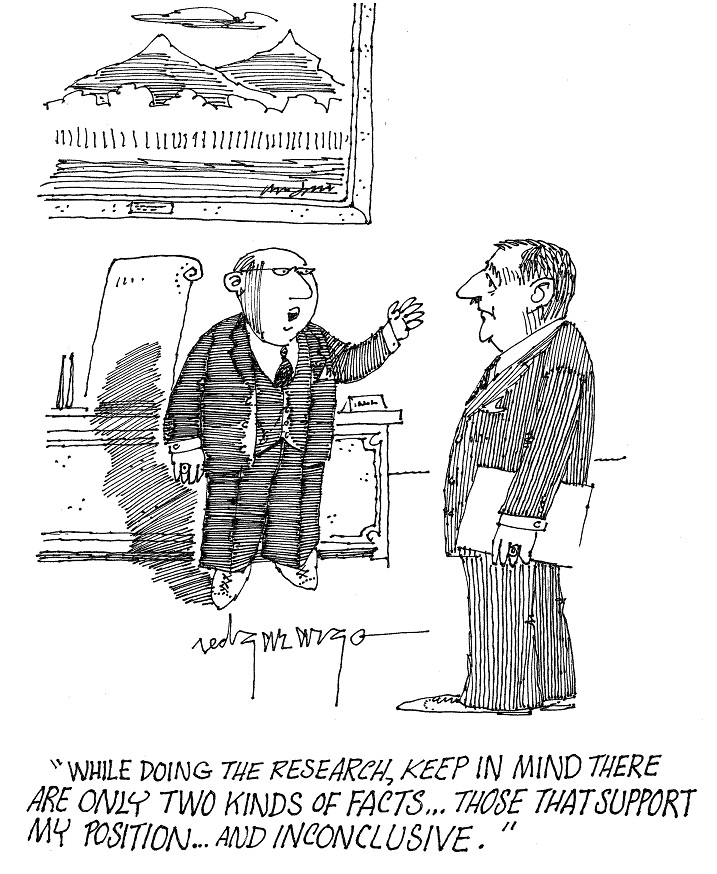

It’s important to define this in advance to avoid confirmation bias: without a pre-defined measure of success, you’re likely to interpret results in a way that matches the outcome you expected or wanted.

You can’t run a test and retrospectively define how success is measured.

Pre-determine your KPI to keep your strategy on track and relevant to your business goals.
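
One way to hold yourself to a pre-determined KPI is to write down the metric and the decision rule before the test starts, then apply them mechanically once the data is in. The sketch below uses a standard two-proportion z-test from the Python standard library. The KPI name, the 0.05 threshold, and the visitor counts are all hypothetical, and this is only one of several reasonable ways to evaluate a test.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Decided BEFORE the test starts (hypothetical values).
KPI = "add-to-cart rate"
ALPHA = 0.05  # significance threshold

# Collected AFTER the test ends (hypothetical counts).
control = {"visitors": 5_000, "conversions": 400}
variant = {"visitors": 5_000, "conversions": 465}

rate_a, rate_b, z, p = two_proportion_z_test(
    control["conversions"], control["visitors"],
    variant["conversions"], variant["visitors"],
)
print(f"{KPI}: control {rate_a:.1%}, variant {rate_b:.1%}, p = {p:.3f}")
print("Success" if p < ALPHA and rate_b > rate_a else "No clear winner")
```

Because the KPI and threshold are fixed up front, there is no room to pick whichever metric happens to look best afterwards.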

You may be wondering, “Why put in this much work?”

Applying this level of rigor to your testing adds strategic value not achieved when using intuition.

Tests run in this fashion end up being linked to one another:

- Whether your test fails or succeeds, you can use it to inform the direction of your subsequent tests.

- Your results will be quantifiable and you’ll be able to determine the value of one adjustment compared with another. This is invaluable when determining the cost/benefit of implementing a larger or site-wide change.

Start Small And Build Tests Up

Testing is a time-consuming process.

Besides the time you spend running a test, you also need to invest in its setup. A complicated redesign or copy rewrite cannot be done overnight.

For example, consider this scenario.

You spend two weeks getting a test ready only for it to fail after a one-week trial period.

How do you react?

Most likely, you’ll start again from scratch, none the wiser. For a company focused on growth, this is toxic.

You can end up in a series of false starts with no progress. Moreover, it’s demotivating to fail repeatedly.

If only there were a way to gauge the success of your test hypothesis without having to invest significant time.

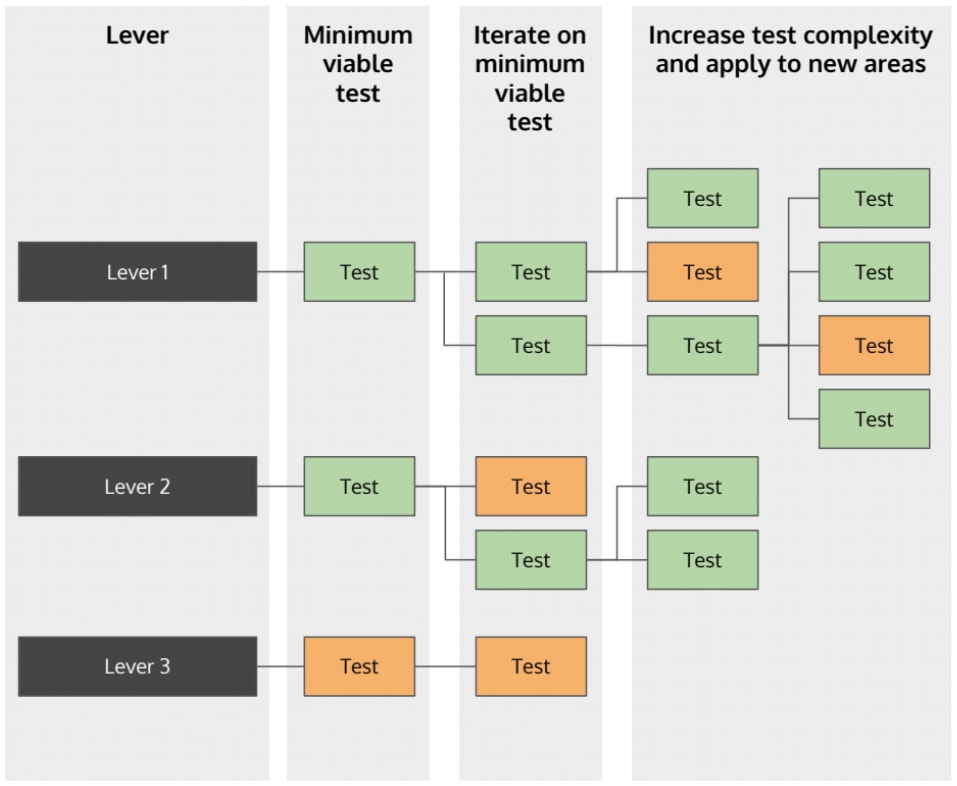

This is possible through a process known as minimum viable testing.

The idea behind this concept is to avoid creating a particularly complicated test. Instead, test the simplest application of your lever and build on it if successful.

For example, let’s say your lever is product pricing strategy.

What would be the minimum viable test here?

You should focus on a few goals when deciding how to implement a minimum viable test:

- Limit the work hours involved in implementing a test.

- Reduce the number of variables that can skew results.

- Utilize pages with enough traffic to get a reasonable comparison result.

With these goals in mind, a minimum viable test with a lever of product pricing would be to change prices on existing product pages that receive a decent amount of traffic but have a low conversion rate.

This test will help you determine whether product pricing has an impact on your audience.

If it doesn’t, you could either eliminate the lever or adjust the test and try again.

I’d suggest running a follow-up test with this lever, however. Additional testing lets you be more confident of its potential for success.

If your minimum viable test does work, repeat the process.

Build on a small change with another small change.

After a few repetitions, your test complexity will increase and you can begin to rope in other departments as you scale testing.

There are a few important factors to keep in mind when setting up your tests:

Compare apples to apples

Make sure that you have a decent baseline to test your results against.

If you’re going to change the price on a page and compare conversion between the previous price and the new price, make sure that you collect conversion data on the page before making changes AND that this baseline covers a number of page visitors comparable to what you expect during the test period.

Minimum traffic for testing

A test that reviews the actions of five – or twenty – site visitors is not stable or informative enough to base permanent changes upon.

The minimum number of page visitors to be considered a viable test group is specific to your site, page traffic and industry.

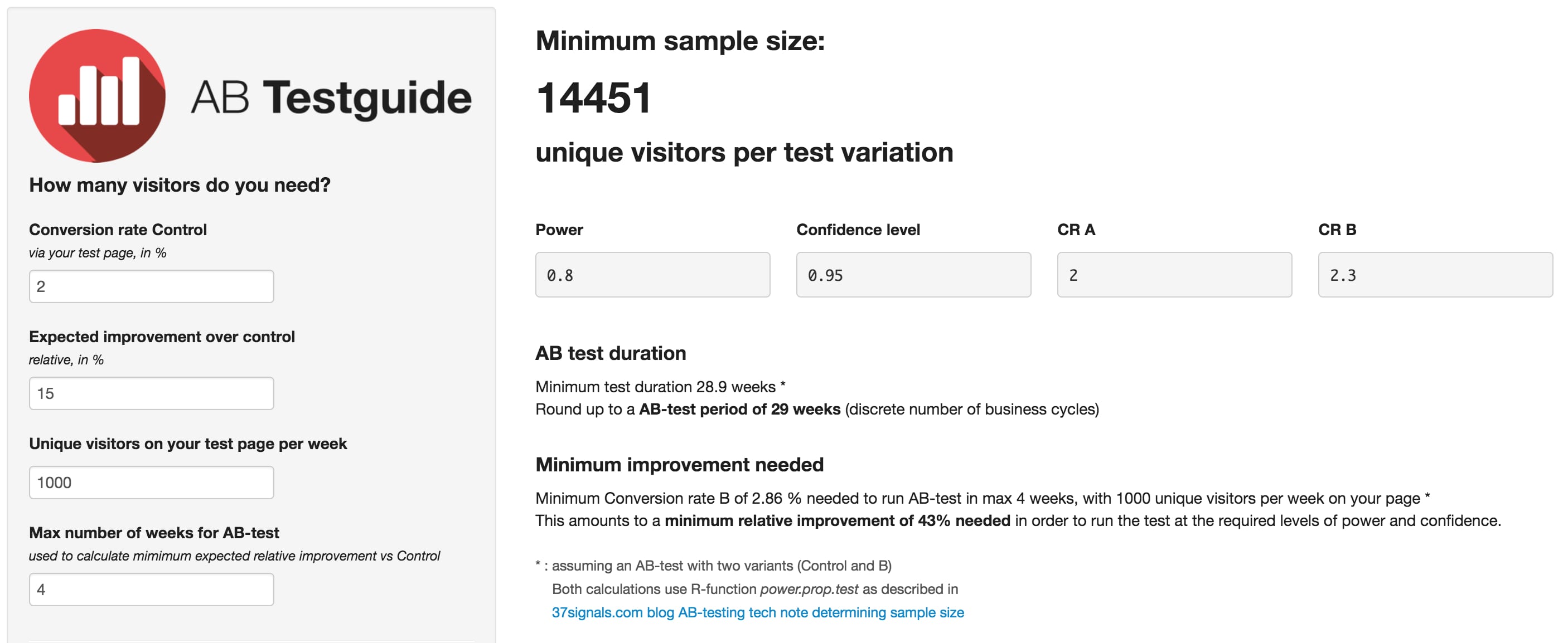

You can use a tool like A/B Test guide to determine how long you will need to run a test to gather enough data for the results to be considered viable.

If a page gets 10,000 or more visitors a month, I would suggest aiming for a minimum of 15-20% of the total monthly traffic to see the modified page in order to have usable data from your test.
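
If you’d rather estimate the numbers yourself, the sketch below applies a common textbook approximation for comparing two conversion rates. It is not necessarily what any particular online calculator does, and the 10% baseline rate, 30% relative lift, and 10,000 monthly visitors are assumptions chosen purely for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_group(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH group to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def days_to_run(per_group, monthly_visitors, groups=2):
    """Days needed if all page traffic is split evenly across the groups."""
    daily_visitors = monthly_visitors / 30
    return ceil(per_group * groups / daily_visitors)


# Hypothetical page: 10% baseline conversion, 10,000 visitors a month,
# and we want to detect a 30% relative lift (10% -> 13%).
needed = visitors_per_group(baseline_rate=0.10, relative_lift=0.30)
print(f"Visitors needed per group: {needed}")
print(f"Days to run: {days_to_run(needed, monthly_visitors=10_000)}")
```

Lower baseline rates or smaller expected lifts push the required sample up quickly, which is one reason the minimum is so specific to your own site.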

Concurrent Testing

Some may consider running multiple tests on the same page concurrently. Perhaps changing the page layout while also adjusting pricing.

I would suggest that you limit the number of extraneous variables that could skew results.

Aim to make one change to each test group at a time. You could either:

- Run tests back-to-back (for example, first test a price adjustment and then return to the original price and test a value-add offer such as free shipping or a gift with purchase).

- Run a different test on a different page (or page group) with roughly equivalent audience and number of visitors.

More data is always best.

Let’s say you test a price reduction. You get a 15% increase in conversion so you implement price cuts sitewide.

But what if you could have gotten the same conversion bump from offering free shipping and adding more images of the product to the page? Or by allowing visitors to see total cost prior to entering their credit card number?

Wouldn’t increasing your conversions without eating into your profits be the path you’d choose if you had more than one successful test?
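
A quick back-of-the-envelope calculation shows why the choice matters. In this sketch every figure is invented (a $50 product that costs $30 to supply, a 2% baseline conversion rate, and free shipping that costs you $2 per order), and `profit_per_1000_visitors` is a hypothetical helper.

```python
def profit_per_1000_visitors(price, unit_cost, conversion_rate, extra_cost_per_order=0.0):
    """Profit from 1,000 visitors given a price, cost, and conversion rate."""
    orders = 1000 * conversion_rate
    margin = price - unit_cost - extra_cost_per_order
    return orders * margin


# Current page: no changes.
baseline = profit_per_1000_visitors(price=50, unit_cost=30, conversion_rate=0.020)

# Option A: a 10% price cut that lifts conversion by 15% (2.0% -> 2.3%).
price_cut = profit_per_1000_visitors(price=45, unit_cost=30, conversion_rate=0.023)

# Option B: free shipping with the same 15% lift, costing $2 per order.
free_shipping = profit_per_1000_visitors(
    price=50, unit_cost=30, conversion_rate=0.023, extra_cost_per_order=2
)

for label, profit in [
    ("Baseline", baseline),
    ("Price cut", price_cut),
    ("Free shipping", free_shipping),
]:
    print(f"{label}: ${profit:,.0f} profit per 1,000 visitors")
```

With these made-up numbers, the price cut converts more visitors yet earns less than doing nothing, while the free shipping offer earns more. You would only learn that by testing both.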

Testing Is Not Limited To Your Website

Conversion optimization today is incredibly website-centric.

Both testers and marketers are guilty of this tunnel vision. While important, the customer journey involves more than just the website.

To understand this better, ask yourself this:

How common is it to see a business spend their entire budget on their website?

If anything, you’re more likely to find a larger share of the budget allocated for SEO, PPC, social media, and TV campaigns (to raise awareness).

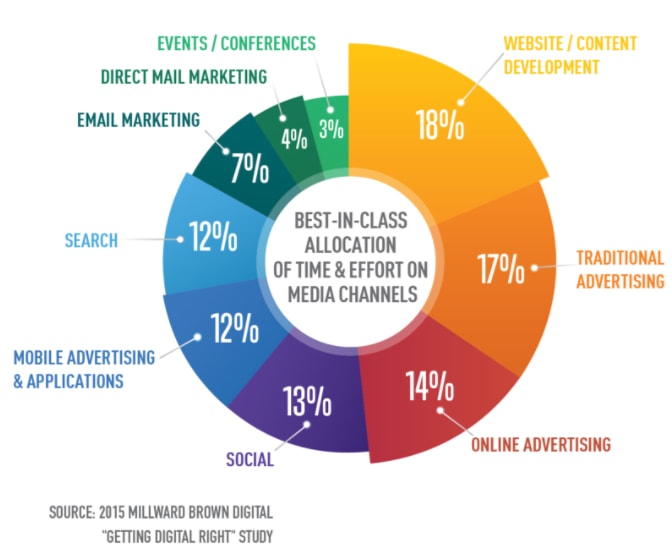

Check out how brands distribute their marketing budget according to this Millward Brown study.

And behind the scenes, companies are hard at work trying to improve their product.

So, why do we focus all our testing efforts on the website?

It’s rare to see anyone apply the same principle of testing to their pricing model, SEO efforts, or email marketing campaigns.

A good tester looks to optimize the entire user journey.

Everything from advertising, content, website design, pricing, and even the product itself is fair game for testing.

Without testing or experimenting on your entire business, you lose inventiveness.

It’s this spirit of testing that’s behind every successful business today.

The largest companies today have found success thanks to relentless testing and an openness to reinvent themselves.

For example, look at Google.

They’ve diversified from a search engine into a technological behemoth with market share in email, office tools, browsers, mobile OS, home automation products and much more.

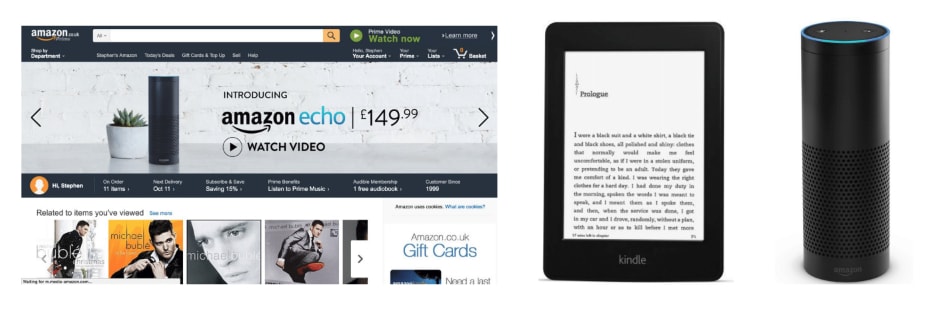

Or how about Amazon?

Starting out as an eCommerce platform, they now have a foot in content streaming, eBooks, and audio entertainment.

They’ve even stepped into the traditional retail storefront and shipping arenas.

The common factor in these behemoths is that they are constantly testing consumer interest in new offerings. They test and drop those products or offerings that aren’t successful.

Even businesses outside the tech space carry out testing (in surprising ways).

Ever come across an unusual item on the McDonald’s self-serve menu only to find it unavailable when you click on it?

You’ve just logged your vote for a new item on McDonald’s future menu.

And they aren’t alone.

Automated menu boards and kiosks allow restaurants to rapidly test offerings to certain customer demographics, test the effectiveness of offering upsells or suggesting add-ons, and much more.

This is not to say you should altogether avoid testing your website. By all means, test your website to ensure user flow is optimized but don’t make it your only focus.

You may ask how to do this if your entire business is based online (SaaS).

Why not test your pricing page? Or a new feature within your product?

Don’t confine yourself to color schemes, copy, buttons, or image placement.

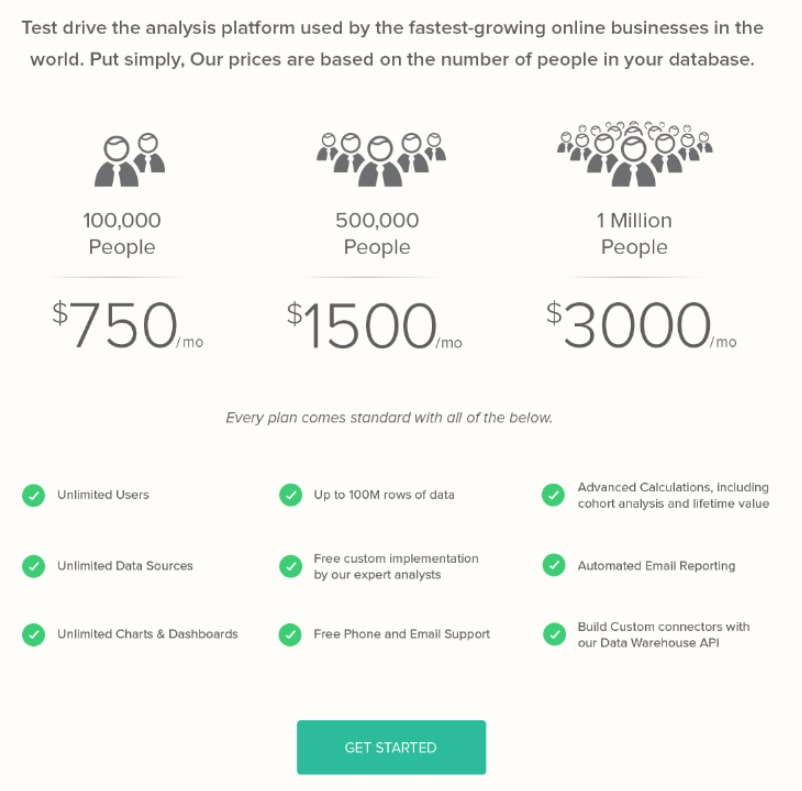

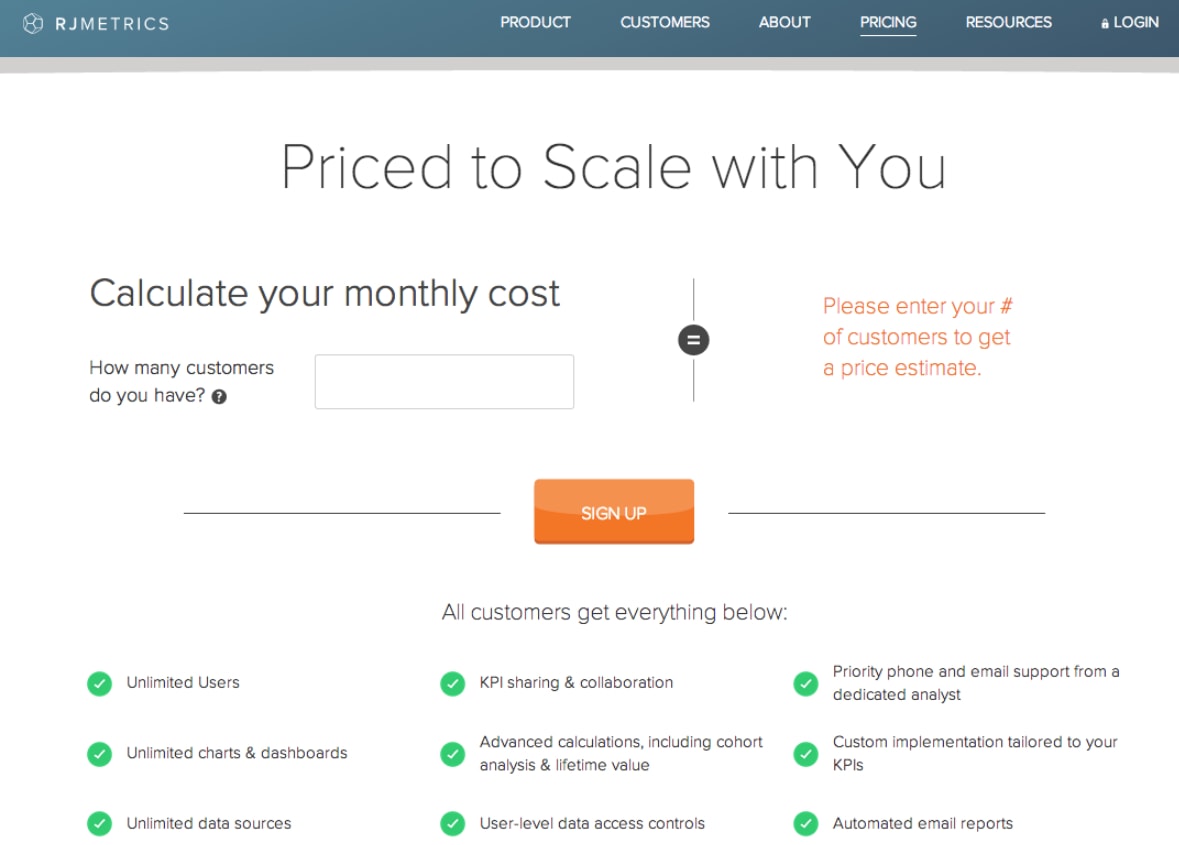

For example, let’s take a look at RJMetrics. The analytics firm offered three different plans based on the number of customers a client had in their database.

Here’s their original pricing page:

There was a fear that potential customers would not be able to relate to the rigid options.

If a prospect did not have close to 100k, 500k, or 1 million leads or customers, they would not be able to justify the cost.

So they revamped their page to be more interactive:

The result?

Some SaaS businesses use split testing to try out new product features or revamp design. The next example comes from help desk software Groove.

By speaking to customers on the phone, they were able to transform their homepage using actual phrases customers could identify with. No surprise, then, that this led to an increase in conversions.

Conclusion

There’s no denying that testing is an engine for long-term growth.

However, testing can only do that if you treat it as a process rather than an activity. The best testers experiment on every aspect of the business to improve the user experience.

Fiddle around with button colors at your own risk.